Part 3.2: Managing the data transformation pipeline

Tutorial

advanced

25 mins

Unity Technologies

In this section of the DOTS Best Practices guide, you will:

- Learn how to create and organize your systems to build an efficient pipeline to transform your input data into the output data your project needs.

- Learn about the performance implications of structural changes and how to mitigate the impact.

1. Control system ordering

Every frame in an ECS project is similar to a data transformation pipeline: the frame starts with a collection of data that represents the simulation state at time T (including user input), and to process that data, systems launch jobs to produce an output: the simulation state at time T+1. Effectively managing that pipeline means controlling which systems operate on the data, and in which order.

ECS provides a number of default ComponentSystemGroups that update at different points during the update loop. By default, when you create a new system, ECS updates it within the SimulationSystemGroup, and Unity arbitrarily (but deterministically) decides at what point in the SimulationSystemGroup update to update your system. See the Entities package documentation on the Update Order of Systems for more details.

To get more control over this process, you should create your own ComponentSystemGroups and nest them within the SimulationSystemGroup. In each system you create, use the UpdateInGroup attribute to specify which ComponentSystemGroup it’s a part of. Each system should also control how it should be ordered in that group relative to other systems, using the [UpdateBefore] and/or [UpdateAfter] attributes.
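Here is a minimal sketch of that approach. The group and system names are hypothetical, not built-in types. A custom group is nested inside SimulationSystemGroup, and the systems inside it are ordered relative to each other:

```csharp
using Unity.Entities;

// Hypothetical group for character-related systems, nested in the built-in SimulationSystemGroup
[UpdateInGroup(typeof(SimulationSystemGroup))]
public partial class CharacterSystemGroup : ComponentSystemGroup { }

// Hypothetical system: runs inside CharacterSystemGroup
[UpdateInGroup(typeof(CharacterSystemGroup))]
public partial struct ReadCharacterInputSystem : ISystem
{
    public void OnUpdate(ref SystemState state) { }
}

// Hypothetical system: same group, explicitly ordered after the input system
[UpdateInGroup(typeof(CharacterSystemGroup))]
[UpdateAfter(typeof(ReadCharacterInputSystem))]
public partial struct MoveCharacterSystem : ISystem
{
    public void OnUpdate(ref SystemState state) { }
}
```

Other systems in SimulationSystemGroup can then be ordered against CharacterSystemGroup as a whole, so the fine-grained ordering constraints stay local to the group.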

Here’s how that might look for some of the systems used to control a character in a game. First, the code reads and processes inputs early in the frame. Next, a character state machine uses those inputs to update the character’s state in the simulation. Finally, the character updates its animations to reflect the updated character state. This results in behavior which feels responsive to the player, because the inputs affect the character state and trigger animations all within a single frame. In this example, the system update orders are specified using [UpdateAfter].

```csharp
public partial struct InputSystem : ISystem
{
    // ...
}

[UpdateAfter(typeof(InputSystem))]
public partial struct StateMachineSystem : ISystem
{
    // ...
}

[UpdateAfter(typeof(StateMachineSystem))]
public partial struct AnimationSystem : ISystem
{
    // ...
}
```

2. Group sync points together, and schedule as much work as you can

To maximize efficient usage of modern, multi-core CPUs, you need to move as much work as possible off of the main thread. Unity uses the job system to do this.

There are three ways you can schedule a job to run:

- ScheduleParallel()

- Schedule()

- Run()

ScheduleParallel() schedules a job (or an Entities.ForEach()) so that its work can be divided across all available CPU cores. However, this might not always be possible: certain algorithms, data structures, or memory access patterns don't lend themselves well to being safely parallelized.

If you cannot use ScheduleParallel(), use Schedule() to schedule the work instead. This executes the work on a single thread, but not on the main thread (unless the main thread is blocked waiting for a job dependency chain to complete). However, even this might not be possible if, for example, the work involves accessing managed objects.

The final option is Run(), which you should only use if you cannot use ScheduleParallel() or Schedule(). When you use Run() to run a job, it blocks the main thread, to allow the job system to forcibly complete any dependencies that have already been scheduled. Only then does the job scheduler execute the job, synchronously on the main thread. Times in the frame when the job scheduler forcibly completes scheduled jobs are known as sync points, and they can cripple performance if you don’t manage them properly.
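The three options can be compared in a minimal sketch. The Speed component, DecaySpeedJob and DecaySpeedSystem are hypothetical. The same IJobEntity is scheduled with ScheduleParallel(), and the commented-out lines show where Schedule() or Run() would go instead:

```csharp
using Unity.Burst;
using Unity.Entities;

// Hypothetical component used only for this example
public struct Speed : IComponentData { public float Value; }

// Each entity is processed independently, so this job is safe to parallelize
[BurstCompile]
public partial struct DecaySpeedJob : IJobEntity
{
    public float Factor;

    void Execute(ref Speed speed) => speed.Value *= Factor;
}

public partial struct DecaySpeedSystem : ISystem
{
    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        var job = new DecaySpeedJob { Factor = 0.99f };

        // First choice: split the work across worker threads
        job.ScheduleParallel();

        // Fallbacks, in decreasing order of preference (use only one call per update):
        // job.Schedule(); // a single worker thread
        // job.Run();      // main thread, after forcing scheduled dependencies to complete
    }
}
```

Only one of the three calls should be used per update; scheduling the same job twice would process every entity twice.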

The main cause of sync points is structural changes within the ECS chunk data. A structural change in ECS is any change to the layout of entity components inside the chunk data, such as:

- Creating or destroying entities

- Adding or removing components

- Changing the value of shared components

Because structural changes must happen on the main thread to avoid race conditions, attempting to enact structural changes via EntityManager is also a common reason that the job system might only allow a job to be executed using Run(). Thankfully, you can use Entity Command Buffers to mitigate this problem. Entity Command Buffers queue up requests for structural changes to be executed all together by an EntityCommandBufferSystem at a pre-specified point later in the frame. EndSimulationEntityCommandBufferSystem is a commonly-used built-in sync point.
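Here is a minimal sketch of the Entity Command Buffer pattern. The Health and Dead components and MarkDeadSystem are hypothetical. The system records a structural change during its update, and EndSimulationEntityCommandBufferSystem plays it back at its existing sync point:

```csharp
using Unity.Burst;
using Unity.Entities;

// Hypothetical components used only for this example
public struct Health : IComponentData { public float Value; }
public struct Dead : IComponentData { }

public partial struct MarkDeadSystem : ISystem
{
    public void OnCreate(ref SystemState state)
    {
        // Don't update until the ECB system's singleton exists
        state.RequireForUpdate<EndSimulationEntityCommandBufferSystem.Singleton>();
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        // Commands recorded here are played back when EndSimulationEntityCommandBufferSystem updates
        var ecb = SystemAPI.GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>()
                           .CreateCommandBuffer(state.WorldUnmanaged);

        foreach (var (health, entity) in SystemAPI.Query<RefRO<Health>>()
                                                  .WithNone<Dead>()
                                                  .WithEntityAccess())
        {
            if (health.ValueRO.Value <= 0f)
                ecb.AddComponent<Dead>(entity); // queued; no chunk data moves yet
        }
    }
}
```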

Use SystemGroups to group main thread work

Other systems might occasionally need to do main thread work, perhaps due to interacting with managed data. For instance, many applications might want to read certain data from the traditional OOP objects such as GameObjects and MonoBehaviours, perform some processing or simulation in DOTS, then copy the results back into the GameObject representations. You should group these main-thread systems together into the smallest possible number of SystemGroups to avoid introducing new sync points part way through the SimulationSystemGroup. The following code demonstrates how to declare ComponentSystemGroups and systems which separate managed data processes from unmanaged data processes.

```csharp
using Unity.Entities;

// A group that runs right at the very beginning of SimulationSystemGroup
[UpdateInGroup(typeof(SimulationSystemGroup), OrderFirst = true)]
[UpdateBefore(typeof(BeginSimulationEntityCommandBufferSystem))]
public partial class PreSimulationSystemGroup : ComponentSystemGroup { }

// A group that runs right at the very end of SimulationSystemGroup
[UpdateInGroup(typeof(SimulationSystemGroup), OrderLast = true)]
[UpdateAfter(typeof(EndSimulationEntityCommandBufferSystem))]
public partial class PostSimulationSystemGroup : ComponentSystemGroup { }

[UpdateInGroup(typeof(PreSimulationSystemGroup))]
public partial class CopyManagedDataToECSSystem : SystemBase
{
    // Copy data from managed objects (such as MonoBehaviours) into components
    protected override void OnUpdate() { }
}

[UpdateInGroup(typeof(SimulationSystemGroup))]
public partial struct ProcessDataSystem : ISystem
{
    // Process the ECS simulation
    public void OnUpdate(ref SystemState state) { }
}

[UpdateInGroup(typeof(PostSimulationSystemGroup))]
public partial struct CopyECSToManagedDataSystem : ISystem
{
    // Copy processed data from components back into managed objects
    public void OnUpdate(ref SystemState state) { }
}
```

In the next section, let's look at a more concrete example of how you might use this technique.

3. Separate HPC# from C#

As discussed in the earlier Data Design section, you should aim to have as much of your data blittable and Burstable as possible. You should also adhere to the other restrictions that allow you to write High Performance C# (HPC#). For more information, see the C# Language Support section of the Burst package documentation.

If your project makes use of any code that involves MonoBehaviours, then it also deals with reference types and standard C#. You should aim to keep these C# sections as self-contained and separate as possible from your DOTS code. If you mix DOTS code with reference types and standard C#, you cannot Burst compile and schedule jobs in those systems.

You can declare a class with an IComponentData interface to produce a managed IComponentData, which is a component that behaves like a standard ECS component in some limited ways. Managed IComponentData can be useful as a temporary measure if you're migrating from managed code to ECS. If you're not using this feature, add UNITY_DISABLE_MANAGED_COMPONENTS to the Scripting Define Symbols in your project's Player Settings to stop anyone from accidentally declaring an IComponentData as a class.

Let’s look at an example. Here, the data we’re transforming is in a component called AnimAIState, which contains an enum value indicating an AI character’s current activity, and a reference to an Animator component on a GameObject which animates and renders the character. A component system runs some complicated state machine logic (not shown) every frame to work out what activity the character should be performing, and then updates a variable in the Animator Controller to play the animation that matches the activity.

```csharp
using Unity.Entities;
using UnityEngine;

public enum CharacterActivity { Idle, Run, Jump, Shoot }

// Managed component: it must be a class because it references a managed object
public class AnimAIState : IComponentData
{
    public Animator Animator; // An Animator component on a GameObject
    public CharacterActivity Activity;
}

public partial struct AnimAISystem : ISystem
{
    // No [BurstCompile]: this loop touches a managed component and a managed Animator
    public void OnUpdate(ref SystemState state)
    {
        foreach (var animAIState in SystemAPI.Query<AnimAIState>())
        {
            animAIState.Activity = SomeComplexStateMachineLogic();
            animAIState.Animator.SetInteger("State", (int)animAIState.Activity);
        }
    }

    // Placeholder for the complex state machine logic (not shown)
    static CharacterActivity SomeComplexStateMachineLogic() => CharacterActivity.Idle;
}
```

At first glance, this seems nice and straightforward. However, that Animator is causing a problem. Because it's a reference to a managed object, the component is not blittable, so it must be declared as a managed IComponentData: a class rather than a struct. This in turn means that it must be processed on the main thread in a foreach rather than in a job, and that the OnUpdate() method of this system cannot have the [BurstCompile] attribute applied to it.

As a result, even though the complex state machine logic is otherwise written as pure HPC#, it can’t take advantage of Burst and the job system can’t run it anywhere except on the main thread. Interacting with the Animator component is always going to be slow because it’s a managed object, but if you rearrange the code so that the components and systems are split up and treated separately, you can improve the state machine logic performance.

```csharp
using Unity.Burst;
using Unity.Entities;
using UnityEngine;

// Managed component: holds only the reference to the managed Animator
public class AnimatorRef : IComponentData { public Animator Animator; }

// Unmanaged, blittable component: can be processed by Burst-compiled jobs
public struct AIState : IComponentData { public CharacterActivity Activity; }

[BurstCompile]
public partial struct AIStateJob : IJobEntity
{
    public void Execute(ref AIState aiState)
    {
        aiState.Activity = SomeComplexStateMachineLogic();
    }

    // Placeholder for the complex state machine logic (not shown)
    static CharacterActivity SomeComplexStateMachineLogic() => CharacterActivity.Idle;
}

[UpdateInGroup(typeof(SimulationSystemGroup))]
public partial struct AIStateSystem : ISystem
{
    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        new AIStateJob().ScheduleParallel();
    }
}

[UpdateInGroup(typeof(PostSimulationSystemGroup))]
public partial struct AnimatorRefSystem : ISystem
{
    // Runs on the main thread without Burst, because it touches the managed Animator
    public void OnUpdate(ref SystemState state)
    {
        foreach (var (aiState, animatorRef) in SystemAPI.Query<RefRO<AIState>, AnimatorRef>())
        {
            animatorRef.Animator.SetInteger("State", (int)aiState.ValueRO.Activity);
        }
    }
}
```

Note that this code uses the PostSimulationSystemGroup from the previous section of this guide, and ensures that the AnimatorRefSystem updates as part of it. This is because the foreach in AnimatorRefSystem must run on the main thread, but should only run after the job scheduled in AIStateSystem has completed. Currently, the best way to express this dependency between a scheduled job and main-thread work that must run after it is to use a sync point to ensure that the scheduled job has completed. In this example, the systems rely on the sync point at EndSimulationEntityCommandBufferSystem, which PostSimulationSystemGroup is ordered to update after.

Because AIState no longer contains an Animator reference, AIStateSystem is free to use Burst compilation in both its OnUpdate() method and in the AIStateJob that it schedules, and can schedule the work to parallelize across the available worker threads. This should mean that the state machine logic takes considerably less time to execute.

4. Beware of structural changes

Structural changes might cause sync points that harm performance. There are also other CPU tasks that ECS must perform during structural changes, which can also impact performance. Let’s say you have an entity with two components called A and B, and you want to add a third component, C.

```csharp
// Create the archetype and entity in a Burst-friendly way
var abComponents = new FixedList128Bytes<ComponentType>
{
    ComponentType.ReadWrite<A>(),
    ComponentType.ReadWrite<B>(),
}.ToNativeArray(state.WorldUpdateAllocator);

var abArchetype = state.EntityManager.CreateArchetype(abComponents);
var entity = state.EntityManager.CreateEntity(abArchetype);

// ... Some time later...
state.EntityManager.AddComponent<C>(entity);
```

Adding a component to an entity triggers a process inside ECS which looks something like this:

- If we add C to an entity of archetype AB, we’ll end up with an entity of archetype ABC. So first, check whether an EntityArchetype for ABC already exists.

- If the EntityArchetype doesn’t already exist, create it

- Archetype ABC contains a list of pointers to chunks. Check to see if any of these chunks have space for a new entity.

- Allocate a new chunk if necessary

- Memcpy() components A and B from our original entity into the new chunk

- Create or copy a component C into the new chunk as well

- Update the EntityManager so that the entity which previously pointed to an index in an AB chunk now points to our new index in the ABC chunk.

- Remove the original entity from the AB chunk using swap_back()

- If the original entity was the only one in the chunk, free the chunk memory now that it’s empty

- If it wasn’t the last entity in the chunk, swap_back has just changed the index of one of the other entities in this chunk. So, update EntityManager again so that the entity we just moved maps to the new index

- If a new chunk has been allocated, or a chunk has been deallocated, clear the cached list of chunks for every EntityQuery that involves that chunk’s archetype. The EntityQuery recalculates the list of chunks it refers to the next time it executes
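The swap-back removal step, and the extra lookup update it causes, can be sketched with a toy model. This is plain C# with a hypothetical type name, not the Entities package's internal implementation. Integer IDs stand in for entities, and a dictionary stands in for the EntityManager's entity-to-index lookup:

```csharp
using System.Collections.Generic;

// Toy model of one chunk: entity IDs stored contiguously in memory order
public sealed class ToyChunk
{
    private readonly List<int> _entities = new List<int>();
    // Stands in for the EntityManager's entity -> index-in-chunk lookup
    private readonly Dictionary<int, int> _indexOf = new Dictionary<int, int>();

    public int Count => _entities.Count;
    public int IndexOf(int entity) => _indexOf[entity];
    public int EntityAt(int index) => _entities[index];

    public void Add(int entity)
    {
        _indexOf[entity] = _entities.Count;
        _entities.Add(entity);
    }

    // Removes an entity in O(1) by moving the last entity into the freed slot.
    // Returns the ID of the entity that was moved, or -1 if none had to move.
    public int RemoveSwapBack(int entity)
    {
        int index = _indexOf[entity];
        int lastIndex = _entities.Count - 1;
        int moved = -1;
        if (index != lastIndex)
        {
            moved = _entities[lastIndex];
            _entities[index] = moved;
            _indexOf[moved] = index; // the second lookup update described above
        }
        _entities.RemoveAt(lastIndex);
        _indexOf.Remove(entity);
        return moved;
    }
}
```

Removing the first of three entities moves the third into slot 0 and patches its index. Removing the last entity in a chunk moves nothing.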

None of these steps is particularly slow on its own, but if you add them all together and then multiply by thousands of entities changing archetypes in a single frame, you can end up with a pretty significant performance impact. You’ll notice that parts of this processing cost also scale up in relation to the number of EntityArchetypes and EntityQueries that have been declared at runtime.

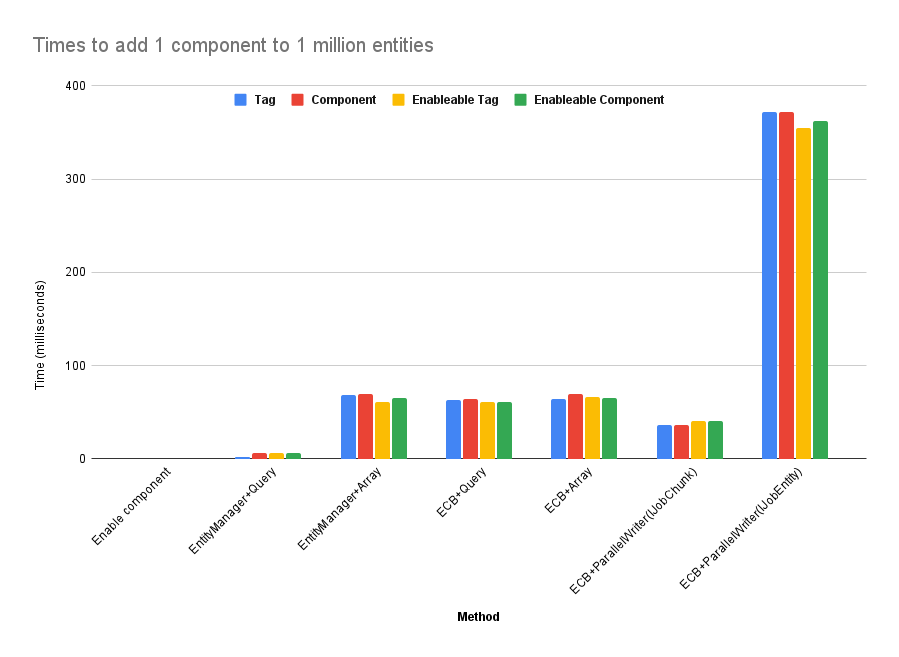

In fact, the performance impact of structural changes depends on a number of factors. Here's a graph showing the median main-thread time (in milliseconds) it takes to add a component to a million entities using various methods. These measurements were taken on a 2019 MacBook Pro with a 2.4 GHz 8-Core Intel Core i9 CPU. Timings differ on other CPUs, but these times clearly indicate that different approaches can result in wildly different performance characteristics.

A copy of the data table used to produce this graph, with example code snippets illustrating each of the techniques, is available in this PDF.

“Tag” simply means “a component that contains no data”. “Component” means “a component that contains data”. Here’s how to interpret the shorthand summaries of the methods shown in the graph:

- Enable Component: don't add any components at all. Simply enable a component that implements IEnableableComponent and which was previously disabled.

- EntityManager+Query: Pass an EntityQuery to the EntityManager to immediately add components in bulk on the main thread

- EntityManager+Array: Pass a NativeArray<Entity> to the EntityManager to immediately add components on the main thread

- ECB+Query: Pass an EntityQuery to an EntityCommandBuffer on the main thread to queue components to add, then execute that EntityCommandBuffer (time includes the ECB execution time)

- ECB+Array: Pass a NativeArray<Entity> to an EntityCommandBuffer on the main thread to queue components to add, then execute that EntityCommandBuffer (time includes the ECB execution time)

- ECB+ParallelWriter(IJobChunk): Use an IJobChunk across multiple worker threads to pass a NativeArray<Entity> per chunk to an EntityCommandBuffer, then execute that EntityCommandBuffer (time includes the ECB execution time)

- ECB+ParallelWriter(IJobEntity): Use an IJobEntity across multiple worker threads to pass instructions to add components to entities one at a time to an EntityCommandBuffer, then execute that EntityCommandBuffer (time includes the ECB execution time)

This graph reveals a few guidelines to consider when writing code that introduces structural changes, as explained below.

Prefer enableable components to structural changes

Components must implement the IEnableableComponent interface in order to be enableable. Enableable components come with some disadvantages, but the advantages are clear. The graph doesn't have enough resolution to show the CPU cost of enabling a million components, but it's around 0.02 milliseconds: that's over 300x faster than the fastest structural change, and over 18000x faster than the slowest!

For this reason, enabling and disabling components, rather than adding or removing them, should be your first choice for dynamically changing an entity's behavior, particularly if the planned changes are frequent.
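Here is a minimal sketch of toggling behavior with an enableable component. The Stunned component and StunUtility helper are hypothetical. It assumes the entity already has Stunned, for example baked onto its prefab in a disabled state, so toggling never changes the entity's archetype:

```csharp
using Unity.Entities;

// Hypothetical enableable tag: enabling/disabling it flips a bit instead of moving the entity
public struct Stunned : IComponentData, IEnableableComponent { }

public static class StunUtility
{
    // Assumes the entity was created with Stunned already present
    public static void SetStunned(EntityManager entityManager, Entity entity, bool stunned)
    {
        // No structural change: the entity stays in the same chunk
        entityManager.SetComponentEnabled<Stunned>(entity, stunned);
    }
}
```

Queries that use WithAll<Stunned>() then match only the entities whose Stunned component is currently enabled.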

When IEnableableComponent is not the answer

Enableable components have some downsides, which may impact whether or not it makes sense to use them in some cases.

Enableable components can affect the performance of jobs and systems that access the archetypes that contain them. If a query specifies that a job should include all enabled instances of a particular enableable component and all the components of that type within a chunk are disabled, the chunk is skipped. If all the components in the chunk are enabled, the job can iterate efficiently over the entities in the chunk, allowing Burst to process multiple components simultaneously using SIMD intrinsics where possible. However, in chunks that contain a mix of enabled and disabled components, each component’s flag must be checked before deciding whether or not it should be processed. These additional checks create branches and interfere with Burst’s ability to vectorize code. A worst-case performance scenario is if the components in a chunk alternate between being enabled and being disabled. In such cases, processing that chunk might take around 2x as long as processing a chunk in which all the components are enabled.
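The difference between full and mixed chunks can be illustrated with a simplified model in plain C#. It assumes at most 64 entities per chunk and a 64-bit mask, whereas the real chunkEnabledMask that IJobChunk receives is a 128-bit v128. The ToyEnabledIteration name is hypothetical:

```csharp
using System;

// Simplified model of one chunk: bit i of enabledMask means "entity i is enabled"
public static class ToyEnabledIteration
{
    public static float SumEnabled(float[] values, int count, ulong enabledMask)
    {
        if (count < 0 || count > 64)
            throw new ArgumentOutOfRangeException(nameof(count));
        if (enabledMask == 0)
            return 0f; // nothing enabled: the whole chunk is skipped

        ulong allEnabled = count == 64 ? ulong.MaxValue : (1UL << count) - 1;
        float sum = 0f;
        if ((enabledMask & allEnabled) == allEnabled)
        {
            // Fully enabled chunk: a branch-free loop that a compiler can vectorize
            for (int i = 0; i < count; i++)
                sum += values[i];
        }
        else
        {
            // Mixed chunk: every entity's bit must be tested before it's processed
            for (int i = 0; i < count; i++)
                if ((enabledMask & (1UL << i)) != 0)
                    sum += values[i];
        }
        return sum;
    }
}
```

An alternating mask such as 0b0101 always takes the branching path, which corresponds to the worst case described above.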

Enableable components can have an impact on other structural changes in the project. See the following section for more details.

Enableable components have a memory cost. Entities with many enableable components take up more space within a chunk. Being able to fit fewer entities into a chunk can mean an increase in the overall runtime memory usage due to needing more chunks to store all the entities, which in turn can contribute to chunk fragmentation. See the “Understand chunks” section of this guide for more information.

In addition to the runtime memory costs, entities which make extensive use of enableable components and which are serialized (in prefabs or subscenes) occupy extra storage space on disk, which can impact the time it takes to load the data.

For these reasons, in cases where entity changes are infrequent, or if chunk fragmentation or efficient Burst vectorization and CPU cache usage when processing the component data are a concern, adding and removing components may still be the best option.

Prefer using EntityManager and EntityQuery to make changes where possible

Passing an EntityQuery to an EntityManager method is by far the most efficient way to make structural changes. This is because the method can operate on whole chunks rather than individual Entities.

One thing that can affect this performance is the presence of enableable components on the target entities. Consider the following code:

```csharp
var query = SystemAPI.QueryBuilder().WithAll<Foo>().Build();
state.EntityManager.AddComponent<Bar>(query);
```

If Foo is not an IEnableableComponent, this structural change is relatively fast: on our 2019 MacBook Pro it takes a little over 6ms to add Bar to a million entities that match the query. However, if Foo is an enableable component, adding Bar takes over 60ms, 10x longer. This makes the performance closer to what would be expected from passing the EntityManager a NativeArray<Entity>. Here's why:

If Foo is not enableable, the WithAll<Foo>() part of the query means something like “find all the chunks used by all the archetypes that contain a Foo and apply the structural change to each of those chunks as a whole”. If Foo is enableable, then WithAll<Foo>() means something more like “find all the chunks used by all the archetypes that contain a Foo, check the enabled flags for Foo in each of those chunks, build a list of the entities for which Foo is enabled, and then visit those entities one at a time to make structural changes”.

Build NativeArray<Entity> in a chunk-friendly way

If you need to build a NativeArray of entities to apply a structural change to, try to ensure that the entity order in the array matches the order of the entities in memory. The simplest way to do this is with an IJobChunk which can iterate over the chunks matching your target query. The job can iterate over the entities in the chunk in order and build a NativeArray of the entities that the change should be applied to. This NativeArray can be passed to an EntityCommandBuffer.ParallelWriter to queue up the required changes. When the EntityCommandBuffer is executed, entities will be accessed one by one via lookups to the EntityManager which will inevitably involve indirection, but because the entities will be accessed in order, the process will increase the chances of CPU cache hits.
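Here is a minimal sketch of visiting entities in chunk order with an IJobChunk. Foo, Bar, AddBarInChunkOrderJob and AddBarSystem are hypothetical. Instead of building a separate array, this variant records one command per entity, walking each chunk's entities in memory order so that playback touches them in that order:

```csharp
using Unity.Burst;
using Unity.Burst.Intrinsics;
using Unity.Collections;
using Unity.Entities;

// Hypothetical components used only for this example
public struct Foo : IComponentData { }
public struct Bar : IComponentData { }

[BurstCompile]
public struct AddBarInChunkOrderJob : IJobChunk
{
    [ReadOnly] public EntityTypeHandle EntityHandle;
    public EntityCommandBuffer.ParallelWriter Ecb;

    public void Execute(in ArchetypeChunk chunk, int unfilteredChunkIndex,
                        bool useEnabledMask, in v128 chunkEnabledMask)
    {
        NativeArray<Entity> entities = chunk.GetNativeArray(EntityHandle);
        // Walks entities in memory order, skipping disabled ones when the query requires it
        var enumerator = new ChunkEntityEnumerator(useEnabledMask, chunkEnabledMask, chunk.Count);
        while (enumerator.NextEntityIndex(out int i))
        {
            // The chunk index is used as the sort key for deterministic playback
            Ecb.AddComponent<Bar>(unfilteredChunkIndex, entities[i]);
        }
    }
}

public partial struct AddBarSystem : ISystem
{
    public void OnCreate(ref SystemState state)
    {
        state.RequireForUpdate<EndSimulationEntityCommandBufferSystem.Singleton>();
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        var query = SystemAPI.QueryBuilder().WithAll<Foo>().WithNone<Bar>().Build();
        var ecb = SystemAPI.GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>()
                           .CreateCommandBuffer(state.WorldUnmanaged);

        state.Dependency = new AddBarInChunkOrderJob
        {
            EntityHandle = SystemAPI.GetEntityTypeHandle(),
            Ecb = ecb.AsParallelWriter()
        }.ScheduleParallel(query, state.Dependency);
    }
}
```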

Understand how EntityQuery and EntityCommandBuffer interact

When an EntityQuery is passed to an EntityManager method, the method operates on whole chunks rather than individual Entities. You might expect this to also be the case when passing an EntityQuery to an EntityCommandBuffer method, but our graph clearly shows that the performance in this case is closer to the performance we see when working with NativeArray<Entity> rather than EntityQuery.

Between the time a command is added to the EntityCommandBuffer and the time when the buffer executes its commands, the content of the chunks might change as a result of other structural changes. The EntityCommandBuffer mitigates this potential problem by evaluating the results of the EntityQuery at the time the command is enqueued and storing it internally in a NativeArray<Entity>, rather than storing the EntityQuery and evaluating it when the buffer is executed. This results in poorer performance but more predictable behavior.

When making structural changes using an EntityQuery, use the query in conjunction with an EntityManager rather than an EntityCommandBuffer, if possible.

Consider when and how to make structural changes

If EntityQueries passed to an EntityCommandBuffer are so much slower than when used with EntityManager, why use EntityCommandBuffer at all?

Well, unlike in our simple test project, actual production code often combines the need for structural changes with computation and data processing work which benefits from being scheduled and possibly parallelized. The job system doesn’t allow you to schedule or parallelize jobs that use EntityManager, or to execute them anywhere other than the main thread. However, you can add commands to an EntityCommandBuffer from scheduled and parallelized jobs, because no structural changes occur until the EntityCommandBuffer is played back.
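The pattern described above can be sketched as follows. The Lifetime component, ExpireJob and ExpireSystem are hypothetical. The per-entity work runs in parallel, and the structural change (destroying expired entities) is only recorded, to be played back later at EndSimulationEntityCommandBufferSystem:

```csharp
using Unity.Burst;
using Unity.Entities;

// Hypothetical component used only for this example
public struct Lifetime : IComponentData { public float SecondsLeft; }

[BurstCompile]
public partial struct ExpireJob : IJobEntity
{
    public float DeltaTime;
    public EntityCommandBuffer.ParallelWriter Ecb;

    void Execute([ChunkIndexInQuery] int sortKey, Entity entity, ref Lifetime lifetime)
    {
        lifetime.SecondsLeft -= DeltaTime;
        if (lifetime.SecondsLeft <= 0f)
            Ecb.DestroyEntity(sortKey, entity); // recorded, not executed, on a worker thread
    }
}

public partial struct ExpireSystem : ISystem
{
    public void OnCreate(ref SystemState state)
    {
        state.RequireForUpdate<EndSimulationEntityCommandBufferSystem.Singleton>();
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        var ecb = SystemAPI.GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>()
                           .CreateCommandBuffer(state.WorldUnmanaged);

        new ExpireJob
        {
            DeltaTime = SystemAPI.Time.DeltaTime,
            Ecb = ecb.AsParallelWriter()
        }.ScheduleParallel();
    }
}
```

Doing the same with EntityManager.DestroyEntity() would force this work back onto the main thread and add a sync point in the middle of the frame.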

Another reason to use EntityCommandBuffer is that playback can be scheduled to occur during pre-existing sync points in the frame, whereas EntityManager will create a new sync point every time it makes a structural change. The added CPU overhead of using an EntityCommandBuffer may be a price worth paying in order to avoid introducing a new sync point.

EntityManager can be useful in some circumstances when you require a structural change to influence an EntityQuery running later in the same frame, although you can often avoid the need for structural changes in the middle of a SystemGroup by controlling system update ordering. By doing so, you can ensure that the structural change and the EntityQuery that relies on the change are separated by a pre-existing sync point (see section Separate HPC# from C#).

The best way to decide whether EntityManager or EntityCommandBuffer is right for any given structural change is to examine the Systems Window and the Unity Profiler’s Timeline View to get a sense of what’s happening on the main and worker threads at the time the change is required.

Enable/disable systems to avoid structural changes

If you want to stop a system from processing every entity that matches its EntityQuery, instead of removing some component from all of those entities, you can disable the system itself. The best way to do this is to call the SystemState's RequireForUpdate() method in your system's OnCreate() method. If an entity with the component you specify exists in the world, your system updates. If you remove that component (or destroy the entity that holds it), the system stops updating, and you only have to add or remove one component rather than potentially thousands.
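Here is a minimal sketch of the idea. WeatherEnabled, Raindrop and RainSystem are hypothetical names. The system only updates while at least one entity with WeatherEnabled exists, so switching the whole system off costs a single structural change:

```csharp
using Unity.Burst;
using Unity.Entities;

// Hypothetical "switch" component; one entity carrying it turns the system on
public struct WeatherEnabled : IComponentData { }

// Hypothetical component processed by the system
public struct Raindrop : IComponentData { public float FallSpeed; }

public partial struct RainSystem : ISystem
{
    public void OnCreate(ref SystemState state)
    {
        // Skip OnUpdate entirely unless some entity has WeatherEnabled
        state.RequireForUpdate<WeatherEnabled>();
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        float dt = SystemAPI.Time.DeltaTime;
        foreach (var drop in SystemAPI.Query<RefRW<Raindrop>>())
            drop.ValueRW.FallSpeed += 9.81f * dt;
    }
}
```

Removing WeatherEnabled from its one entity stops RainSystem without touching any of the Raindrop entities.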

Structural changes during entity creation

Another common mistake is constructing entities at runtime by adding one component at a time. For example, here’s how not to create an entity with 3 components called Foo, Bar and Baz:

```csharp
// BAD!
var entity = state.EntityManager.CreateEntity();
state.EntityManager.AddComponent<Foo>(entity);
state.EntityManager.AddComponent<Bar>(entity);
state.EntityManager.AddComponent<Baz>(entity);
```

Each of those calls to EntityManager.AddComponent() creates a new archetype (if it doesn't already exist) and moves the entity into a whole new chunk. Each archetype exists for the rest of the runtime of your application and contributes to the cost of the necessary calculations any time a new EntityQuery needs to calculate which EntityArchetypes it references.

It’s much better to create the archetype that describes the entity you want to end up with and then create an entity directly from that archetype.

```csharp
// Cache this archetype if we intend to use it again later
var newEntityArchetype = state.EntityManager.CreateArchetype(typeof(Foo), typeof(Bar), typeof(Baz));
var entity = state.EntityManager.CreateEntity(newEntityArchetype);

// Better yet, if you want to create lots of identical entities at the same time
var entities = new NativeArray<Entity>(10000, Allocator.Temp);
state.EntityManager.CreateEntity(newEntityArchetype, entities);
```

Adding or removing multiple components simultaneously

If you need to add more than one component to, or remove more than one component from, an entity (or a set of entities) at runtime, you can use the ComponentTypeSet struct to specify all of the components to be added or removed at once, which helps to minimize the number of structural changes and redundant archetypes. The struct can be passed to EntityManager methods such as:

- AddComponent(Entity, ComponentTypeSet)

- AddComponent(EntityQuery, ComponentTypeSet)

- AddComponent(SystemHandle, ComponentTypeSet)

As well as the equivalent RemoveComponent() methods.
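Here is a minimal sketch using ComponentTypeSet. Foo, Bar, Baz and the ComponentTypeSetExample helper are hypothetical. Both components are added, or removed, in one structural change, so no intermediate archetype containing only one of them is created:

```csharp
using Unity.Entities;

// Hypothetical components used only for this example
public struct Foo : IComponentData { }
public struct Bar : IComponentData { }
public struct Baz : IComponentData { public int Value; }

public static class ComponentTypeSetExample
{
    // Adds Bar and Baz to every entity matching the query in a single operation
    public static void AddBarAndBaz(EntityManager entityManager, EntityQuery fooQuery)
    {
        var toAdd = new ComponentTypeSet(ComponentType.ReadWrite<Bar>(),
                                         ComponentType.ReadWrite<Baz>());
        entityManager.AddComponent(fooQuery, toAdd);
    }

    // Removes both components from one entity in a single operation
    public static void RemoveBarAndBaz(EntityManager entityManager, Entity entity)
    {
        var toRemove = new ComponentTypeSet(ComponentType.ReadWrite<Bar>(),
                                            ComponentType.ReadWrite<Baz>());
        entityManager.RemoveComponent(entity, toRemove);
    }
}
```

Components added this way start with their default values (here, Baz.Value is 0).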

Profiling structural changes

The Entities package contains a number of useful diagnostic tools for measuring the performance of ECS code. A particularly useful addition is the Structural Changes module for the Unity Profiler. It’s well worth spending some time learning to use this to monitor the impact of structural changes on your project’s runtime performance.

5. Consider job and system granularity

All code that is executed at runtime incurs a runtime cost. Systems provide a way to control the order of the parts of the data transformation and a powerful query system for selecting the data to transform. Jobs make it easy to write safe, deterministic multithreaded code. But systems and jobs themselves can add to runtime cost if used excessively, so it’s important to develop an understanding of how much work a system or a job should do in order to offset its cost, and how many of each of them should be used in your application.

The following sections discuss the reasons for some of these overheads and offer advice on how to mitigate them.

ScheduleParallel() overhead

You can reduce the cost of parallel scheduling by tuning the number of worker threads used by your application. Do this by setting JobsUtility.JobWorkerCount so that your application uses enough worker threads to perform the work it requires without introducing CPU bottlenecks, but not so many worker threads that they spend a lot of time idle. In other words, in the Timeline view of the Unity Profiler's CPU Module, make sure your worker threads are spending as much time as possible running jobs.
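Here is a minimal sketch of setting the worker count at startup. WorkerCountConfig is a hypothetical MonoBehaviour, and the default of 4 is an arbitrary placeholder; the right value should come from profiling on your target hardware:

```csharp
using Unity.Jobs.LowLevel.Unsafe;
using UnityEngine;

// Hypothetical bootstrap component that caps the number of job worker threads
public class WorkerCountConfig : MonoBehaviour
{
    // Placeholder value; tune it from Profiler captures on the target device
    [SerializeField] private int desiredWorkerCount = 4;

    void Awake()
    {
        // The worker count must stay within the range the job system supports
        JobsUtility.JobWorkerCount =
            Mathf.Clamp(desiredWorkerCount, 1, JobsUtility.JobWorkerMaximumCount);
    }
}
```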

Job scheduling overhead

Regardless of whether a job is scheduled to run on a single thread or multiple threads, there is a small CPU cost for every job. Unity's job scheduler has to manage jobs and dependencies in the job queue, and before a job can run, Unity must allocate thread memory and copy data so that the job has access to the data it needs to process. This CPU overhead is small (considerably less than the time it takes to spin up a dedicated thread in a multithreading system which is not based around jobs), but it is not zero. The overhead may become noticeable in applications that schedule a large number of jobs that each run for a very short time.

If the profiler shows that the main thread spends more CPU time scheduling a job than the job actually takes to execute, consider a way to increase the amount of useful work performed by the job. For example, if several jobs operate on similar sets of data, consider combining the jobs together into a single larger job. If a job is scheduled to run in parallel across many worker threads, but the work performed by each thread is very small, consider scheduling the work to run on a single worker thread instead.

Resist the urge to combine too many jobs together into an "uber job" if the original jobs operate on different sets of data. If a job works with a very large set of disparate data, it could reduce CPU cache line utilization, which could significantly reduce the performance of the job. The opposite is also true: if you have a number of jobs that operate on the same components, and none of those jobs has a specific dependency on a previous job, consider combining them into a single job that performs multiple operations on the same set of data. Do as much work as you can reasonably do on data whilst it's in the cache.

Be wary of the temptation to simply move the work back to the main thread to avoid the overhead. If the main thread work happens before or after other scheduled jobs, running the work synchronously on the main thread may introduce a sync point. It might be more effective to incur the scheduling overhead of a small job just to avoid a sync point.

It makes sense to run a job synchronously on the main thread in these situations:

- When your application is targeting CPUs with a low number of CPU cores (3 or fewer)

- When the work can’t be reasonably combined with other jobs, and needs to be run during a sync point in the frame

If one of these situations applies, choose between running the job using Run() or avoiding the job system entirely, perhaps by simply using a foreach with a query. A job run on the main thread still incurs some CPU overhead, because the job dependency system must still prevent it from introducing race conditions with jobs running on other threads. If the job safety system is not required for a particular piece of code that will run on the main thread, and your OnUpdate() method relies on managed data (so you can't simply apply [BurstCompile] to the whole method), you can tell the Burst compiler to compile a method directly without having to create a job. See the Burst documentation page on Directly Calling Burst Compiled Code for more information.
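Here is a minimal sketch of a directly called Burst method. It assumes a recent Burst version that supports direct calls to [BurstCompile] static methods declared in a [BurstCompile] static class. ActivityMath and ChooseActivity are hypothetical, and the parameters and return value are kept to primitive types, which direct calls support:

```csharp
using Unity.Burst;

// Hypothetical helper: Burst compiles the marked static method, and managed code can call it directly
[BurstCompile]
public static class ActivityMath
{
    // Returns an activity index (0 = Idle, 1 = Run, 3 = Shoot, matching CharacterActivity)
    [BurstCompile]
    public static int ChooseActivity(float distanceToTarget, float attackRange, int hasAmmo)
    {
        if (distanceToTarget <= attackRange)
            return hasAmmo != 0 ? 3 : 0;
        return 1;
    }
}
```

A non-Burst OnUpdate() that also touches managed data could then call `(CharacterActivity)ActivityMath.ChooseActivity(distance, range, ammo)` and still get the Burst-compiled version of that calculation.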

Untangle job dependency chains

Jobs scheduled by systems depend on each other based on their execution order and the components they read and write. If one system schedules a job that writes a component, and a later system schedules a job that reads that component, the later system's jobs will depend on the earlier system's Dependency handle. Each system's Dependency handle represents the jobs it schedules. When a system schedules more than one job, their handles can either be chained to make a sequential execution order (the default behavior) or manually combined into a single handle using the JobHandle.CombineDependencies() method, which allows the jobs to execute in parallel.
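A minimal sketch of the two options, assuming Entities 1.x. ComponentA, ComponentB, Job1, Job2 and SystemA are placeholder names and the job bodies are arbitrary. Both jobs are scheduled against the incoming state.Dependency and then merged with JobHandle.CombineDependencies(), instead of being chained:

```csharp
using Unity.Burst;
using Unity.Entities;
using Unity.Jobs;

public struct ComponentA : IComponentData { public float Value; }
public struct ComponentB : IComponentData { public float Value; }

[BurstCompile]
public partial struct Job1 : IJobEntity
{
    public void Execute(ref ComponentA a) { a.Value += 1f; }   // writes ComponentA
}

[BurstCompile]
public partial struct Job2 : IJobEntity
{
    public void Execute(ref ComponentB b) { b.Value *= 0.5f; } // writes ComponentB
}

[BurstCompile]
public partial struct SystemA : ISystem
{
    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        // Chained (default) form, for comparison:
        //   new Job1().ScheduleParallel();
        //   new Job2().ScheduleParallel(); // waits for Job1
        //
        // Combined form: each job depends only on the incoming handle,
        // so the two jobs can run at the same time on different workers.
        JobHandle job1 = new Job1().ScheduleParallel(state.Dependency);
        JobHandle job2 = new Job2().ScheduleParallel(state.Dependency);
        state.Dependency = JobHandle.CombineDependencies(job1, job2);
    }
}
```

Combining lets Job1 and Job2 overlap with each other, but in this sketch SystemA still ends up with a single Dependency handle that covers both jobs.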

The problem is that this system-level dependency calculation can make the job scheduler wait for jobs that read or write components the job being scheduled doesn't actually use. For example, imagine a system called SystemA which schedules two jobs: Job1, which writes to ComponentA, and Job2, which writes to ComponentB. With the default chaining approach, SystemA's Dependency handle expresses a dependency on Job2, which in turn depends on Job1. Later, SystemB updates and schedules Job3, which reads ComponentA. SystemB ends up waiting for both jobs scheduled by SystemA to complete, even though SystemB doesn’t need to access ComponentB at all.

To avoid this situation, you could move SystemA’s Job1 to SystemB. In this example, both Job1 and Job3 only need to access ComponentA. Grouping the jobs into the same system based on their component access patterns removes SystemB’s dependency on Job2 and ComponentB without increasing the number of systems overall.
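One way to express the restructured pipeline, assuming Entities 1.x: SystemA keeps only Job2, and SystemB schedules Job1 followed by Job3. The component and job names follow the example above; ComponentC is a hypothetical output for Job3, and the job bodies are placeholders. Because SystemA no longer writes ComponentA, SystemB's jobs no longer wait on Job2:

```csharp
using Unity.Burst;
using Unity.Entities;

public struct ComponentA : IComponentData { public float Value; }
public struct ComponentB : IComponentData { public float Value; }
public struct ComponentC : IComponentData { public float Value; } // hypothetical output

[BurstCompile]
public partial struct Job1 : IJobEntity
{
    public void Execute(ref ComponentA a) { a.Value += 1f; }   // writes ComponentA
}

[BurstCompile]
public partial struct Job2 : IJobEntity
{
    public void Execute(ref ComponentB b) { b.Value *= 0.5f; } // writes ComponentB
}

[BurstCompile]
public partial struct Job3 : IJobEntity
{
    // Reads ComponentA, writes the hypothetical ComponentC.
    public void Execute(in ComponentA a, ref ComponentC c) { c.Value = a.Value * 2f; }
}

// SystemA now only touches ComponentB.
[BurstCompile]
public partial struct SystemA : ISystem
{
    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        new Job2().ScheduleParallel();
    }
}

// SystemB owns all ComponentA work. Implicit scheduling chains Job3 after Job1
// through state.Dependency, which is the ordering Job3 actually needs.
[UpdateAfter(typeof(SystemA))]
[BurstCompile]
public partial struct SystemB : ISystem
{
    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        new Job1().ScheduleParallel();
        new Job3().ScheduleParallel();
    }
}
```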

Long-running tasks

Performance problems can also occur at the other end of the job size spectrum. Sometimes it can be tempting to create very long-running jobs, perhaps even ones that are intended to run for multiple frames. One example of such a use case is procedural generation of new areas in a game environment. Unity’s job system is not designed with very long-running jobs in mind, and it’s all too easy to accidentally introduce a sync point that halts execution until the long-running job has completed, generating a very large CPU spike in the process.

There are two options for long-running jobs: either break the workload down into smaller, more granular sections that fit comfortably between sync points in your frame, or don’t perform the work in a job at all. C# lets you create and manage your own threads for CPU-intensive tasks, and the async/await pattern is well suited to IO-bound tasks.
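As a sketch of the second option, the plain .NET code below (System.Threading and System.Collections.Concurrent only, no Unity APIs) runs long generation work on a dedicated background thread and hands results back through a concurrent queue that the main thread polls without ever blocking. AreaGenerator and GeneratedArea are hypothetical names, and the random-height loop is a placeholder for real procedural generation:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical result of one long-running generation task.
public sealed class GeneratedArea
{
    public int AreaIndex { get; }
    public int[] Heights { get; }
    public GeneratedArea(int areaIndex, int[] heights) { AreaIndex = areaIndex; Heights = heights; }
}

// Runs CPU-heavy generation on its own thread; the main thread only polls.
public sealed class AreaGenerator : IDisposable
{
    private readonly BlockingCollection<int> _requests = new BlockingCollection<int>();
    private readonly ConcurrentQueue<GeneratedArea> _results = new ConcurrentQueue<GeneratedArea>();
    private readonly Thread _thread;

    public AreaGenerator()
    {
        _thread = new Thread(Run) { IsBackground = true, Name = "AreaGenerator" };
        _thread.Start();
    }

    // Called from the main thread to queue work.
    public void Request(int areaIndex) => _requests.Add(areaIndex);

    // Called from the main thread (for example once per frame); never blocks.
    public bool TryGetResult(out GeneratedArea area) => _results.TryDequeue(out area);

    private void Run()
    {
        // Blocks this background thread until work arrives; the loop ends once
        // CompleteAdding() has been called and all requests are consumed.
        foreach (int areaIndex in _requests.GetConsumingEnumerable())
        {
            // The array is owned by this thread until it is published through
            // the queue, so no other thread can observe a half-written result.
            var heights = new int[256];
            var rng = new System.Random(areaIndex); // deterministic per area
            for (int i = 0; i < heights.Length; i++)
                heights[i] = rng.Next(0, 100);

            _results.Enqueue(new GeneratedArea(areaIndex, heights));
        }
    }

    public void Dispose()
    {
        _requests.CompleteAdding(); // let Run() finish the remaining requests
        _thread.Join();
        _requests.Dispose();
    }
}
```

In a Unity project, a system or MonoBehaviour could call TryGetResult once per frame and turn each finished area into entities on the main thread, so no frame ever waits for generation to finish.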

For more information, see the series of blog posts about C# Multithreading on the third-party site “Coding With Shadman”.

If you decide to create threads for long-running work, remember to reduce JobWorkerCount accordingly to avoid thread contention. Also remember that, unlike jobs, threads you create are not covered by the job safety system, so nothing stops them from accessing data in a way that isn't thread-safe. When using threads, ThreadPools or async tasks, think about how to structure your data to eliminate the possibility of race conditions.
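A minimal sketch of the worker-count adjustment, assuming a project that starts a known number of its own long-running threads. JobsUtility.JobWorkerCount (in Unity.Jobs.LowLevel.Unsafe) is the settable property that controls how many job worker threads Unity uses; the ReserveCoresForCustomThreads component and its m_CustomThreadCount field are hypothetical, and the sketch assumes exactly one instance exists:

```csharp
using Unity.Jobs.LowLevel.Unsafe;
using UnityEngine;

// Hypothetical bootstrap component: frees job workers for custom threads.
// Assumes a single instance in the scene.
public class ReserveCoresForCustomThreads : MonoBehaviour
{
    // Number of long-running threads this project creates itself (assumption).
    [SerializeField] int m_CustomThreadCount = 1;

    int m_OriginalWorkerCount;

    void Awake()
    {
        m_OriginalWorkerCount = JobsUtility.JobWorkerCount;

        // Keep at least one job worker so scheduled jobs still run off the main thread.
        JobsUtility.JobWorkerCount = Mathf.Max(1, m_OriginalWorkerCount - m_CustomThreadCount);
    }

    void OnDestroy()
    {
        // Give the cores back to the job system when the custom threads go away.
        JobsUtility.JobWorkerCount = m_OriginalWorkerCount;
    }
}
```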

Systems overhead

There is a fixed performance cost associated with every ECS system in a project. Systems are not free for a number of reasons:

- By default, every system you create will call its OnUpdate() method every frame, even if there are no entities that match the system’s queries.

- Systems can be instructed to only execute their OnUpdate() methods when there is data to process by adding the [RequireMatchingQueriesForUpdate] attribute to the system. Every system with this attribute performs a check every frame to see if any entities match any of the queries used by the system. Systems with matching entities run their update methods, and systems with none don’t update. The test is fast, but the time adds up in applications with a large number of systems.

- To iterate over the chunks that match an EntityQuery, each system accesses a structure called EntityTypeHandle. Because EntityTypeHandle structs are invalidated by structural changes, each system gets its own copy before each OnUpdate(), so the CPU cost of these accesses grows linearly with the number of active systems.

- Often, when scheduling or running a job, your code may need to get a ComponentLookup or a BufferLookup to pass to the job. An application with a lot of systems may end up creating and accessing these structures redundantly, multiple times in a frame.

- Every system contains one Dependency property. Before a system’s OnUpdate() method runs, this JobHandle represents the combined job dependencies of the systems that come earlier in the system update order. When the system schedules jobs, the JobHandle is updated to include those jobs. After OnUpdate(), the JobHandle is passed to the next system in the pipeline. More systems in an application mean more jobs and a more complex chain of JobHandle dependencies. If a system schedules multiple jobs, their JobHandles are combined into that system’s Dependency, so a later system that depends on it waits for all of the first system’s jobs to complete, even though it might only need one of those jobs.
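Within a single system, you can at least avoid re-creating a lookup every frame. A minimal sketch, assuming Entities 1.x: the ComponentLookup is created once in OnCreate() with state.GetComponentLookup() and refreshed each frame with Update(ref state), which is required because structural changes can invalidate it. Target, DistanceToTarget, TrackTargetJob and TrackTargetSystem are hypothetical names:

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Entities;
using Unity.Mathematics;
using Unity.Transforms;

// Hypothetical components for illustration.
public struct Target : IComponentData { public Entity Value; }
public struct DistanceToTarget : IComponentData { public float Value; }

[BurstCompile]
public partial struct TrackTargetJob : IJobEntity
{
    // Read-only, so many entities can look up the same target in parallel.
    [ReadOnly] public ComponentLookup<LocalTransform> TransformLookup;

    public void Execute(in LocalTransform transform, in Target target, ref DistanceToTarget distance)
    {
        if (TransformLookup.TryGetComponent(target.Value, out LocalTransform targetTransform))
            distance.Value = math.distance(transform.Position, targetTransform.Position);
    }
}

[BurstCompile]
public partial struct TrackTargetSystem : ISystem
{
    // Cached across frames instead of being fetched in every OnUpdate().
    ComponentLookup<LocalTransform> m_TransformLookup;

    [BurstCompile]
    public void OnCreate(ref SystemState state)
    {
        m_TransformLookup = state.GetComponentLookup<LocalTransform>(isReadOnly: true);
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        // Refresh the cached lookup before use; it may be stale after structural changes.
        m_TransformLookup.Update(ref state);
        new TrackTargetJob { TransformLookup = m_TransformLookup }.ScheduleParallel();
    }
}
```

Each system still owns and updates its own lookup, so several systems that need the same lookup still pay that cost several times per frame.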

Add the [RequireMatchingQueriesForUpdate] attribute to systems that might spend large parts of the runtime with no entities to process. Avoid using it on systems that will run all or most of the time. For example, your PlayerCharacterSystem probably doesn’t need to check for matching queries because the player character exists all the time, except perhaps in the main menu, where performance isn’t so important. Conversely, Level3TrapUpdateSystem should probably have the attribute if that system is only intended to run in level 3. Of course, if you have a different TrapUpdateSystem for each of your game’s 250 levels, you should consider ways to combine those systems.
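A minimal sketch of both ideas together, assuming Entities 1.x: a single data-driven TrapUpdateSystem replaces per-level trap systems, and [RequireMatchingQueriesForUpdate] skips its OnUpdate() in levels that contain no trap entities. The Trap component, its fields and TrapTimerJob are hypothetical:

```csharp
using Unity.Burst;
using Unity.Entities;

// Hypothetical data-driven trap: one component type for every level,
// instead of one system per level.
public struct Trap : IComponentData
{
    public float Cooldown;  // seconds between activations
    public float Timer;     // seconds until the next activation
    public bool Triggered;  // true on the update in which the trap fires
}

[BurstCompile]
public partial struct TrapTimerJob : IJobEntity
{
    public float DeltaTime;

    public void Execute(ref Trap trap)
    {
        trap.Timer -= DeltaTime;
        trap.Triggered = trap.Timer <= 0f;
        if (trap.Triggered)
            trap.Timer += trap.Cooldown;
    }
}

// Levels without traps have no matching entities, so OnUpdate() is skipped.
[RequireMatchingQueriesForUpdate]
[BurstCompile]
public partial struct TrapUpdateSystem : ISystem
{
    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        new TrapTimerJob { DeltaTime = SystemAPI.Time.DeltaTime }.ScheduleParallel();
    }
}
```

Per-level differences then live in component data (cooldowns, timers) rather than in separate systems, so the project pays for one system check per frame instead of 250.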

If your project contains a large number of systems and updating them is having a noticeable effect on CPU performance, consider combining systems. Don’t be tempted to take this to extremes by combining everything into a single “Uber-system” - doing so would remove all of the modularity and flexibility from your data transformation pipeline, making code maintenance and bug fixing much more difficult. Look at individual systems within a system group, and if they share similarities in terms of the data they access and transform, consider combining them to reduce redundant checks and data access and to give you more control over job dependencies.